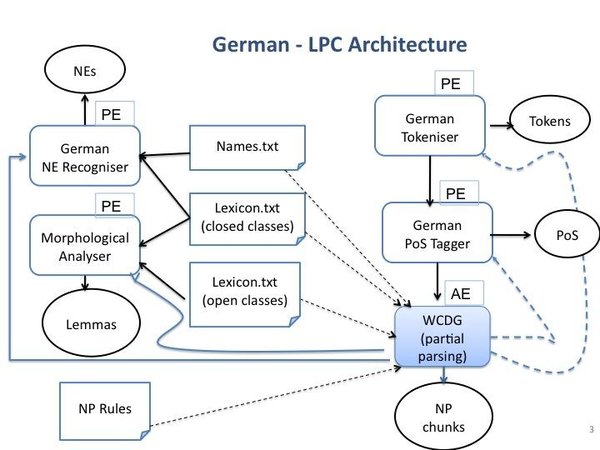

The language processing chain for German relies on two types of components:

- modules belonging to the Weighted Constraint Dependency Parser (WCDG), developed at the University of Hamburg (http://nats-www.informatik.uni-hamburg.de/view/CDG/WebHome)

- open-source modules already integrated into the GATE Architectural Framework (http://gate.ac.uk)

General architecture of the German LPC system

We used the main components of the WCDG as follows:

- the tokeniser was modified so that it also recognises sentence boundaries

- the PoS tagger gives a first prediction of the possible Parts of Speech, each with a certain probability

- WCDG is run under a time limit; based on its results, the PoS tags or token boundaries are corrected (especially verb particles vs. prepositions)

- lemmas and proper names are extracted, based on the lexical resource included in the WCDG
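
The first step in this list, a tokeniser that also recognises sentence boundaries, can be illustrated with a minimal sketch. The actual component is a Perl programme based on regular expressions and a dictionary of German abbreviations; the Python version below is only an approximation under assumptions: the abbreviation set, the regular expression and the function name `split_sentences` are hypothetical and not taken from the project code.

```python
import re

# Hypothetical sample of German abbreviations; the real dictionary is not reproduced here.
ABBREVIATIONS = {"z.B.", "bzw.", "Dr.", "Nr.", "usw.", "ca."}
SENTENCE_END = {".", "!", "?"}

# A word (letters or digits, optionally with inner hyphens) or a single punctuation mark.
TOKEN_RE = re.compile(r"\w+(?:-\w+)*|[^\w\s]")


def split_sentences(text):
    """Return a list of sentences, each a list of tokens."""
    sentences, current = [], []
    # str.split() treats any run of whitespace as one separator,
    # so multiple empty lines between sentences are ignored.
    for chunk in text.split():
        if chunk in ABBREVIATIONS:
            tokens = [chunk]  # keep the abbreviation and its full stop together
        else:
            tokens = TOKEN_RE.findall(chunk)
        for token in tokens:
            current.append(token)
            if token in SENTENCE_END:
                sentences.append(current)
                current = []
    if current:  # trailing tokens without final punctuation
        sentences.append(current)
    return sentences
```

The abbreviation lookup is what keeps "Dr." or "z.B." from ending a sentence. This sketch does not handle an abbreviation directly followed by other punctuation (for example "usw.,"), which a production tokeniser would need to cover.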

The architecture of the German LPC is shown below.

Each component is briefly described below:

- Sentence splitter and tokeniser - The sentence splitter and tokeniser is a Perl programme based on regular expressions. It relies on a dictionary of abbreviations specific to German. Multiple empty lines between sentences are ignored.

- PoS Tagger - The PoS tagger is an open-source reimplementation of the TnT tagger (http://www.coli.uni-sb.de/~thorsten/tnt/). TnT is a trigram tagger based on second-order Markov models: the probability of a tag depends on the two preceding tags. The states of the model represent tags, the outputs represent words, and the transition probabilities depend on the states, which in this case consist of pairs of tags. The German model is trained on the NEGRA corpus (http://www.coli.uni-saarland.de/projects/sfb378/negra-corpus/negra-corpus.html) and uses the Stuttgart-Tübingen Tagset (STTS). For the list of tags used, see http://www.coli.uni-saarland.de/projects/sfb378/negra-corpus/stts.asc. The input of the PoS tagger is one token per line. The output is one token followed by one or more PoS-probability pairs, separated by whitespace.

- Lemmatiser - As mentioned, the WCDG system is based on a full-form lexicon consisting of several files:

  - Nomen.txt: explicitly declared nouns, approx. 27500 entries. Each noun is assigned to one of 38 declension classes. The following features are additionally specified: sg (singulare tantum, no plural forms possible), pl (plurale tantum, no singular forms possible) and sto (nouns denoting materials). The entries are ordered alphabetically and grouped into categories such as count nouns, nouns denoting objects, etc.

  - Adjectives.txt (5800 entries) and Verbes.txt (8670 entries): similarly constructed

  - Lexicon.cdg: contains all closed word classes as well as patterns for digits (in Roman and Arabic dates)

  Based on these files, dedicated Perl programmes take a word as input and produce its morphological analysis. The input can also consist of the word together with its PoS; in this case the search space is restricted and only the entries corresponding to the given PoS are selected. This morphological analysis is used to select the features for the Lemmatiser of the German LPC.

- NP-Chunker - The WCDG is a dependency parser, so no constituent structures are produced. However, the results of the WCDG can be used to build NP constituents by defining rules that combine PoS tags belonging to the same dependency subtree. Our NP-Chunker is based on a set of about 10 rules.

- NE Recogniser - The German LPC uses two NE recognisers. The first is based on the file Names.txt included in the WCDG system, which contains 30100 explicitly declared first and family names. The second is the German variant of the open-source MunPex (Multilingual NER), http://www.semanticsoftware.info/munpex. This component was developed for the GATE framework and adapted to the UIMA framework.
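
The PoS tagger output format described above (one token followed by one or more PoS-probability pairs, separated by whitespace) could be read by a downstream component roughly as follows. This is a sketch under assumptions: the function names `parse_tagger_line` and `best_tag` are hypothetical, the sample line and its probabilities are invented for illustration, and tokens are assumed to contain no whitespace. APPR (preposition) and PTKVZ (separated verb particle) are STTS tags.

```python
def parse_tagger_line(line):
    """Split one tagger output line into (token, [(tag, probability), ...])."""
    fields = line.split()
    # Expect a token plus at least one complete (tag, probability) pair,
    # i.e. an odd number of fields, three or more.
    if len(fields) < 3 or len(fields) % 2 == 0:
        raise ValueError(f"malformed tagger line: {line!r}")
    token = fields[0]
    candidates = [
        (fields[i], float(fields[i + 1]))
        for i in range(1, len(fields), 2)
    ]
    return token, candidates


def best_tag(candidates):
    """Return the tag with the highest probability."""
    return max(candidates, key=lambda pair: pair[1])[0]
```

For a hypothetical line such as `an APPR 0.62 PTKVZ 0.38`, `parse_tagger_line` yields the token `an` with two candidate tags, and `best_tag` picks `APPR`. Keeping all candidates, rather than only the best one, is what allows a later step to revise the tagger's first prediction, for example in the verb particle vs. preposition case.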

ATLAS (Applied Technology for Language-Aided CMS) is a project funded by the European Commission under the CIP ICT Policy Support Programme.